Let’s be honest — most of us think we’re better readers than we actually are. You sit down with a book or a financial report, glide through a few pages, and suddenly realize you remember almost nothing. It’s not that you’re distracted. It’s that reading, like engineering, is a system. And if you don’t understand how its parts work together, you lose the thread before you even notice.

Here’s the thing: engineers have mastered the art of making sense of chaos. They look at tangled systems, see the invisible relationships, and turn complexity into clarity. That’s what systems thinking is about. And it’s exactly what your brain needs if you want to read not just faster, but deeper.

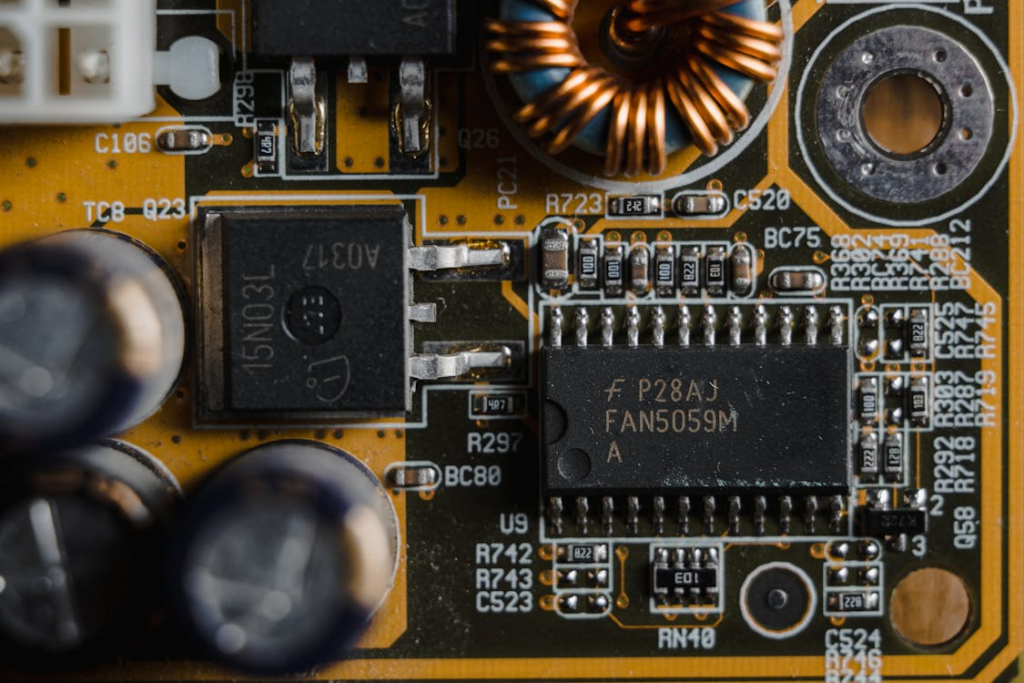

You can see this mindset in companies like OurPCB, where engineers constantly analyze how tiny components interact to create powerful circuits. Reading works the same way — sentences are circuits, ideas are signals, and your understanding is the current that flows through them.

Image from Pexels

Wait, what exactly is systems thinking?

Imagine standing in the middle of a city you’ve never visited. Cars, people, lights — everything moves with its own logic. Systems thinking teaches you not just to see what’s in front of you, but to trace how it all connects. It’s about relationships, not just components.

In simple terms, it’s the ability to step back and notice patterns between things. Engineers use it to predict how changing one variable affects an entire system. Readers can use it to grasp how one sentence shapes a paragraph, how one argument supports a conclusion, and how one story connects to another in their own lives.

It’s not fancy theory; it’s practical awareness. When you start reading like a systems engineer, you stop treating words like separate dots. You start seeing the web that links them.

The secret overlap between engineers and great readers

Think about how an engineer troubleshoots a problem. They don’t just stare at one broken wire; they look at the network it’s in. Good readers do the same thing with meaning.

Here’s what both groups share:

- Pattern recognition: Engineers look for signal flow; readers look for narrative flow.

- Feedback awareness: Engineers check if their designs respond well to input; readers notice when ideas loop back or reinforce earlier points.

- Testing hypotheses: Engineers ask, “If I tweak this, what happens?” Readers ask, “If the author means this, what follows?”

This overlap matters because it shifts reading from passive to active. You’re no longer just absorbing text. You’re running a live experiment inside your head.

Reading as mental engineering

Here’s a fun thought: every time you read, your brain is building a circuit. Words act like tiny resistors or conductors, shaping the flow of meaning. If a sentence confuses you, that’s a weak connection. If a story resonates, that’s a circuit firing smoothly.

So what can you learn from engineers here?

They don’t panic when a system doesn’t work. They map it. They sketch it out. They ask, “Where’s the bottleneck?” You can do the same when reading something dense — maybe it’s a tough financial model or a classic essay. Sketch the flow of ideas. Draw arrows between concepts. It’s not childish; it’s cognitive design.

Honestly, some of the most effective readers I know treat a paragraph like a motherboard. They track connections, isolate meaning, and test interpretations. It’s not about speed; it’s about architecture.

The “OurPCB principle” of clarity

OurPCB’s engineers don’t design circuits at random. They visualize every layer, trace every signal path, and anticipate failure points before they cause problems. Imagine applying that to reading. Instead of just highlighting quotes, you’d predict how a chapter will resolve. You’d test whether an argument supports its claim before accepting it.

There’s an elegance to that mindset — not rigid, but deliberate. Systems thinking helps you move from “What is this saying?” to “How does this fit into the larger structure?” That’s the real upgrade to comprehension. It’s the difference between memorizing and understanding.

You know what? This approach even helps with emotional reading — novels, essays, memoirs. Because systems thinking isn’t cold logic. It’s empathy with structure. When you notice how an author builds tension or releases emotion, you’re reading like an engineer listening to a signal. You’re attuned.

How to read with a systems mindset (without overcomplicating it)

Alright, let’s get practical. You don’t need a circuit board or a math degree. You just need a small shift in how you approach the page.

Try this:

- Spot the relationships, not just the facts. Ask yourself, “How does this idea connect to the last one?”

- Notice feedback loops. When a point repeats or evolves, that’s your clue it’s important.

- Zoom in, then zoom out. Engineers switch between micro and macro views constantly. Do the same — sentence, paragraph, whole text.

- Sketch the system. Even a quick diagram can reveal where meaning breaks down.

- Anticipate flow. Before turning the page, guess where the argument’s going. Then see if you were right.

This isn’t about adding work. It’s about creating a rhythm — active, curious, and connected.

Why your brain loves this approach

Here’s something fascinating. Neuroscience research suggests that comprehension engages many of the same brain areas as problem-solving. So when you read with systems thinking, you’re feeding your brain what it craves: patterns and prediction.

That’s why this method feels more engaging. It turns reading from a linear grind into a living puzzle. Each paragraph becomes a gear that fits into a larger machine. And when that machine clicks, you get that satisfying “aha” spark, much like the rush engineers describe when a design finally works.

Also, it sticks. Because meaning that’s built, not memorized, lasts longer. You retain it the way you remember how to ride a bike — as a process, not a list.

But wait, doesn’t this make reading feel mechanical?

Good question. It might sound like systems thinking removes the magic from reading. But the truth is the opposite. When you understand how the gears turn, you appreciate the beauty of the movement even more.

Think of a musician who studies composition. They still feel the music, but now they also see its structure. Systems thinking adds dimension, not detachment. It lets you enjoy both the melody and the mechanics.

And yes, sometimes the system breaks. You’ll misread a metaphor, miss a pattern, or hit a paragraph that refuses to click. That’s fine. Engineers fail forward all the time. The point isn’t perfection; it’s curiosity.

From complexity to calm

In a world where information floods every channel (see what I did there?), clarity feels rare. Systems thinking offers a quiet counterbalance: a way to slow down and connect dots intentionally. It’s how you move from confusion to comprehension, from scattered notes to a symphony.

And maybe that’s what we’ve been missing about reading. It’s not just a skill or a habit. It’s a design problem — one we can actually solve.

So next time you open a book or a market report, try seeing it like a system. Notice the flow, trace the links, test the loops. You’ll be surprised how much more you retain, and how much calmer your mind feels when every idea finally fits.

Final thought: Reading as an act of engineering empathy

There’s something poetic about this crossover between logic and language. Engineers build systems that work. Readers build systems that mean. When you combine both, you get an understanding that’s not just intellectual, but emotional — like the moment when a story, a theory, or even a financial model suddenly makes sense.

Systems thinking doesn’t replace creativity or intuition. It strengthens them. It teaches you to see structure in chaos, flow in noise, and sense in symbols. That’s how engineers think. And that’s how great readers grow.

So, grab your next book like you’d grab a toolkit. Not to fix it, but to explore it. Reading isn’t about turning pages anymore. It’s about turning on connections.